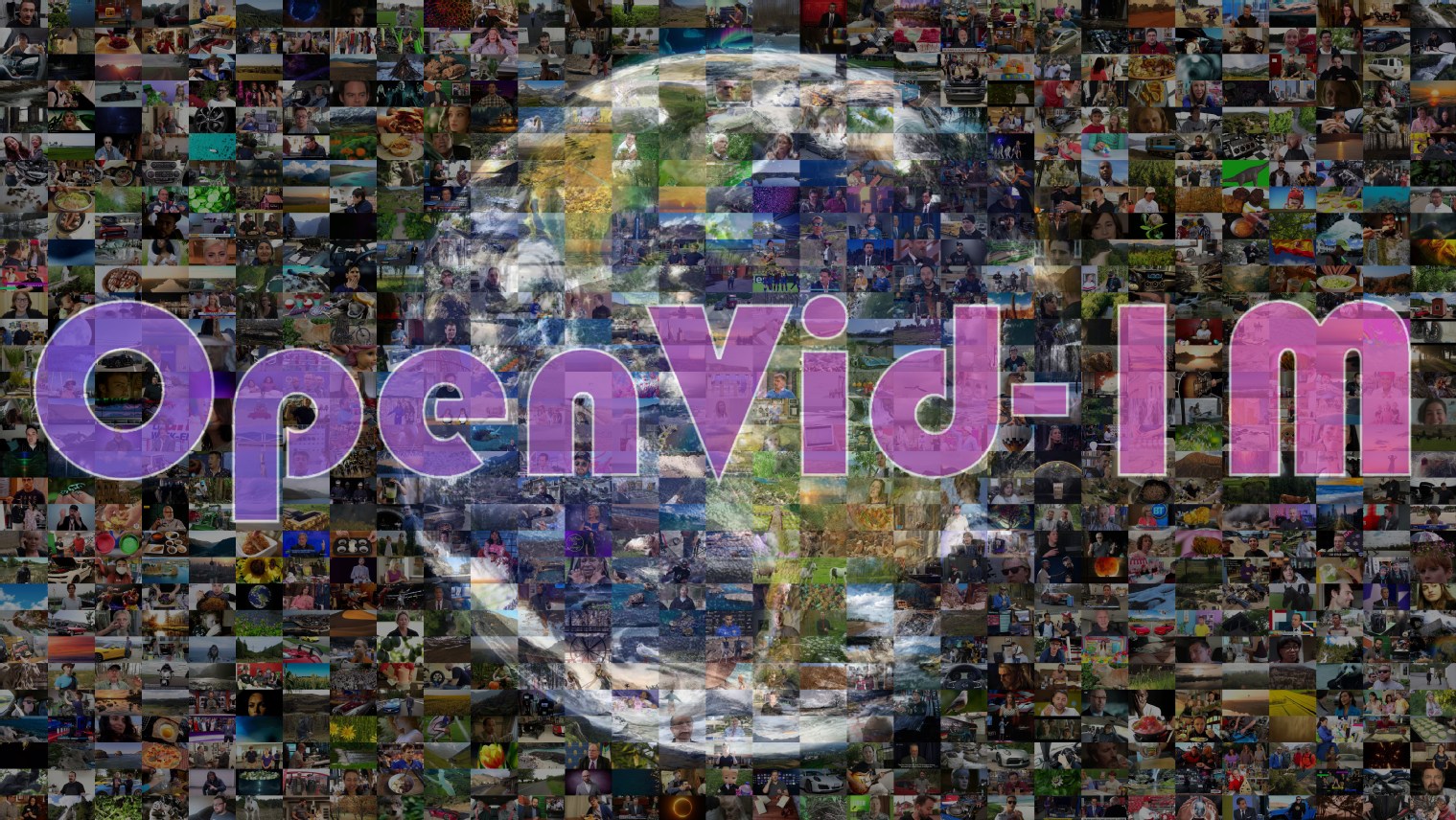

A Million-Scale High-Quality Text-to-Video Dataset and Generation Model (Accepted by ICLR 2025)

The task of text-to-video generation has recently gained widespread attention with the emergence of large models such as Sora and Kling. However, two major challenges remain: (1) accurate, open-source, high-quality datasets are lacking, and (2) most models rely on simple cross-attention modules, which fail to fully exploit the semantic information in text inputs. To address these issues, the team led by Associate Professor Tai Ying from the School of Intelligence Science and Technology at Nanjing University has developed OpenVid-1M, a million-scale, high-quality text-to-video dataset, and proposed a novel video generation model, MVDiT, which effectively leverages the structural and semantic information from both visual and textual inputs.

As of March 2025, OpenVid-1M ranked first on the Hugging Face trending video datasets chart, with over 210,000 downloads. It has been widely adopted in various research areas, including video generation (Goku, Pyramid Flow, AnimateAnything), long-form video generation based on autoregressive models (ARLON), visual understanding and generation (VILA-U), 3D/4D generation (DimensionX), video variational autoencoders (IV-VAE), video frame interpolation (Framer), multimodal foundation models (VideoOrion), and video colorization (VanGogh). This work has been accepted by the International Conference on Learning Representations (ICLR) 2025, one of the top-tier conferences in artificial intelligence.

Figure: Illustration of the OpenVid-1M Dataset and the Architecture of the MVDiT Model

Enhancing Text-to-Video Generation with Instance-Aware Structured Descriptions (Accepted by CVPR 2025)

Despite recent advancements in text-to-video (T2V) generation, the field still faces two major challenges: (1) existing video-text pairs often suffer from missing details, semantic hallucinations, and vague motion features; and (2) conventional methods struggle to achieve fine-grained alignment at the instance level, resulting in insufficient instance fidelity in generated videos. To tackle these issues, the team led by Associate Professor Tai Ying from the School of Intelligence Science and Technology at Nanjing University proposed InstanceCap, the first instance-aware structured captioning framework for T2V generation. Alongside this, they constructed InstanceVid, a high-quality dataset with 22,000 video-text pairs, and developed an inference-enhanced generation pipeline tailored to structured descriptions.

Specifically, the InstanceCap framework introduces several key innovations:

1) Auxiliary Model Collective (AMC) Paradigm: By integrating object detection and video instance segmentation, it decomposes global video content into independent instance units, significantly enhancing instance-level fidelity.

2) Improved Chain-of-Thought (CoT) Reasoning Guided by Multimodal Large Language Models (MLLMs): This process refines dense captions into structured statements covering instance attributes, background details, and camera motion.

3) Motion Intensity Filtering Mechanism: Used during the construction of the InstanceVid dataset to ensure each video contains at least one high-motion instance.
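To make the structured description and the filtering rule more concrete, here is a minimal Python sketch. It is an illustration only, not the InstanceCap implementation: the class names, field names, the `motion_score` value (assumed to be a precomputed per-instance motion intensity between 0 and 1), and the `threshold` of 0.5 are all hypothetical. The sketch shows a caption split into instance attributes, background details, and camera motion, plus a filter that keeps only videos with at least one high-motion instance.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class InstanceDescription:
    """One instance in a video (hypothetical schema)."""
    name: str
    attributes: str
    motion_score: float  # assumed precomputed motion intensity in [0, 1]


@dataclass
class StructuredCaption:
    """Structured caption: instances, background, camera motion."""
    video_id: str
    instances: List[InstanceDescription] = field(default_factory=list)
    background: str = ""
    camera_motion: str = ""


def has_high_motion_instance(caption: StructuredCaption, threshold: float = 0.5) -> bool:
    # True if at least one instance reaches the (assumed) motion threshold.
    return any(inst.motion_score >= threshold for inst in caption.instances)


def filter_by_motion(captions: List[StructuredCaption], threshold: float = 0.5) -> List[StructuredCaption]:
    # Keep only videos that contain at least one high-motion instance.
    return [c for c in captions if has_high_motion_instance(c, threshold)]


captions = [
    StructuredCaption(
        video_id="clip_a",
        instances=[
            InstanceDescription("dog", "brown, running across a lawn", 0.8),
            InstanceDescription("bench", "wooden, stationary", 0.0),
        ],
        background="a sunny park",
        camera_motion="slow pan to the right",
    ),
    StructuredCaption(
        video_id="clip_b",
        instances=[
            InstanceDescription("vase", "blue, on a table", 0.1),
            InstanceDescription("curtain", "white, barely moving", 0.2),
        ],
        background="a quiet living room",
        camera_motion="static",
    ),
]

kept = filter_by_motion(captions)
print([c.video_id for c in kept])
```

In this toy input only `clip_a` has an instance above the threshold, so `clip_b` is dropped. How motion intensity is actually measured for InstanceVid is not specified here.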

Experiments demonstrate that InstanceCap significantly outperforms baseline methods such as Panda-70M in video caption accuracy. Furthermore, T2V models fine-tuned on InstanceVid exhibit a 57.17% improvement in instance generation quality compared to baseline models. The InstanceCap framework is fully compatible with mainstream multimodal large language models. This work has been accepted by the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2025, a top-tier conference in the field of computer vision.

Figure: Schematic Diagram of the InstanceCap Framework

Ultra-high-definition (UHD) image restoration presents a series of formidable challenges due to its inherent high resolution, complex content, and rich detail. To address these difficulties, the team led by Associate Professor Tai Ying from the School of Intelligence Science and Technology at Nanjing University introduces a novel perspective on UHD image restoration through progressive frequency decomposition. The task is divided into three progressive stages: Zero-Frequency Enhancement, Low-Frequency Restoration, and High-Frequency Refinement.
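The idea of splitting a signal into zero-, low-, and high-frequency parts can be illustrated with a small Python sketch. This is not the team's method, which uses learned sub-networks; it is a fixed-filter toy example on a 1-D signal (a stand-in for one image row) using a plain discrete Fourier transform. The function names and the `cutoff` value are assumptions made for illustration.

```python
import cmath


def dft(x):
    """Naive discrete Fourier transform of a real or complex sequence."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse DFT; returns the real part of each reconstructed sample."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)).real / n
        for t in range(n)
    ]


def split_bands(signal, cutoff):
    """Split a signal into zero-, low-, and high-frequency components.

    The three returned components sum back to the original signal.
    `cutoff` (an assumed parameter) is the highest bin index treated as low.
    """
    n = len(signal)
    spectrum = dft(signal)
    zero = [0j] * n
    low = [0j] * n
    high = [0j] * n
    for k, coeff in enumerate(spectrum):
        freq = min(k, n - k)  # bins k and n - k hold the same frequency
        if freq == 0:
            zero[k] = coeff   # zero frequency: the global mean level
        elif freq <= cutoff:
            low[k] = coeff    # low frequencies: coarse structure
        else:
            high[k] = coeff   # high frequencies: fine detail
    return idft(zero), idft(low), idft(high)


signal = [3.0, 4.0, 6.0, 5.0, 2.0, 1.0, 3.0, 4.0]
zero, low, high = split_bands(signal, cutoff=1)
recon = [z + l + h for z, l, h in zip(zero, low, high)]
print([round(v, 6) for v in zero])
print([round(v, 6) for v in recon])
```

The zero-frequency component is constant and equal to the signal mean, and adding the three components recovers the input up to floating-point rounding. That is the sense in which the three stages address complementary parts of the content.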

Based on this analysis, the team proposes ERR, a new restoration framework composed of three cooperative sub-networks:

- Zero-Frequency Enhancement module (ZFE), which integrates global priors to learn comprehensive mapping relationships;

- Low-Frequency Restoration module (LFR), which focuses on recovering coarse image structures by restoring low-frequency information;

- High-Frequency Refinement module (HFR), which incorporates a proposed Frequency-Window Kolmogorov–Arnold Network (FW-KAN) to refine textures and details with high fidelity.

Experimental results show that the ERR framework significantly outperforms existing methods across multiple UHD restoration benchmarks. Extensive ablation studies further validate the individual contributions and effectiveness of each module.

This work has been accepted by the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2025, one of the most prestigious conferences in the field of artificial intelligence and computer vision.

Figure: The Proposed ERR Framework with Frequency-Domain Decoupling